The line between science fiction and reality keeps blurring. Scientists at Stanford University recently reported a milestone that could change how humans interact with technology: in a new study, researchers decoded human thoughts using a brain-computer interface (BCI), with no speech, movement, or gestures required.

This development has enormous potential, particularly for individuals with paralysis, locked-in syndrome, or severe speech impairments. Imagine a future where a person can send a text, control a wheelchair, or operate a computer simply by thinking. That future might not be as far away as we once imagined.

What Exactly Did the Study Achieve?

Previous BCI research often relied on movement-related brain signals. For instance, scientists would record neural activity when participants attempted to move their lips, tongue, or vocal cords. These signals were then converted into speech or text.

Stanford’s latest approach is different. Instead of depending on movement-related signals, researchers focused purely on decoding brain activity that corresponds to thought patterns. By using implanted electrodes, the team recorded neural firing patterns and fed this data into advanced machine-learning algorithms. The computer then interpreted these signals to generate accurate, actionable outputs — such as specific words or commands — without requiring any physical movement.

In other words, the study suggests that the neural activity behind a thought, with no attempted movement at all, can be enough to drive a digital action.

How Brain-Computer Interface (BCI) Technology Works

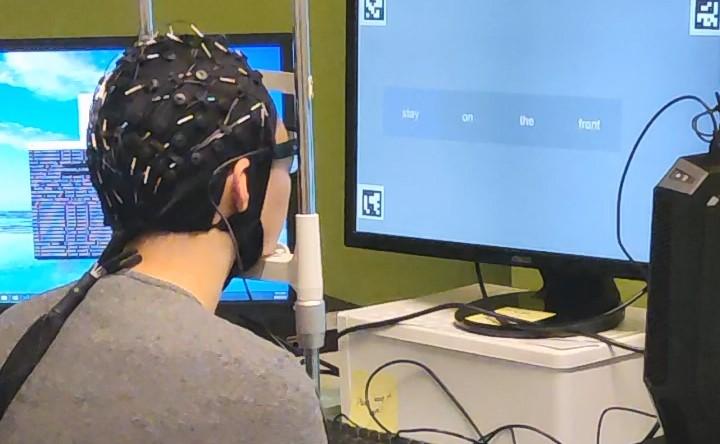

BCI technology relies on capturing and decoding electrical activity from the brain’s neurons. There are two main approaches:

- Non-invasive methods – such as EEG (electroencephalography) caps worn on the head. These measure electrical signals from outside the skull.
- Invasive methods – such as electrode arrays implanted directly into the brain’s motor or speech cortex for more precise readings.

Stanford’s study used an invasive approach for higher accuracy. Here’s a step-by-step look at how it works:

- Signal Capture – Electrodes detect neuron firing patterns in real time.
- Signal Processing – AI and machine-learning models filter out background noise and identify meaningful patterns.
- Decoding – The software maps these patterns to specific thoughts, words, or intentions.
- Execution – The decoded signal is converted into text on a screen, a computer command, or control input for a device.

Over time, the system adapts to the user’s neural patterns, so decoding becomes faster and more accurate.
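To make the four steps concrete, here is a toy sketch in Python. It is not the Stanford team's software: the three "electrodes", the word templates, and the function names are all made up for illustration, and a simple nearest-template match stands in for the machine-learning models a real system would use.

```python
from statistics import mean

# Hypothetical templates: typical firing rate on 3 electrodes for each word.
TEMPLATES = {
    "yes": [0.9, 0.1, 0.2],
    "no": [0.1, 0.8, 0.3],
    "help": [0.2, 0.2, 0.9],
}

def smooth(samples):
    """Signal processing: average repeated readings per electrode to damp noise."""
    return [mean(channel) for channel in zip(*samples)]

def decode(features):
    """Decoding: pick the word whose template is closest (squared distance)."""
    def distance(word):
        return sum((f - t) ** 2 for f, t in zip(features, TEMPLATES[word]))
    return min(TEMPLATES, key=distance)

def execute(word):
    """Execution: turn the decoded word into on-screen text."""
    return f"TEXT: {word}"

def run_pipeline(samples):
    """Chain processing, decoding, and execution for one batch of readings."""
    return execute(decode(smooth(samples)))

# Signal capture (simulated): three noisy readings from three electrodes.
readings = [
    [0.15, 0.75, 0.35],
    [0.05, 0.85, 0.25],
    [0.10, 0.80, 0.30],
]
print(run_pipeline(readings))
```

In this example the averaged readings land closest to the "no" template, so the pipeline outputs that word. Real decoders work with far more channels and with learned models rather than fixed templates.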

Real-World Applications

The implications of this technology are immense. Here are some of the most promising use cases:

- Assistive Communication – People with ALS (amyotrophic lateral sclerosis), locked-in syndrome, or severe speech impairments could “speak” by thinking.
- Mobility Solutions – Wheelchairs, prosthetic limbs, and robotic arms could be controlled through thought, restoring independence to millions.
- Smart Home Control – Turning on lights, operating televisions, and adjusting thermostats could be done mentally, offering new accessibility for people with disabilities.
- Workplace Productivity – In the future, professionals might compose emails, write code, or navigate software purely through mental commands.
- Gaming and Virtual Reality – BCIs could create fully immersive experiences by eliminating the need for physical controllers.

The Role of Companies Like Neuralink

Elon Musk’s company Neuralink has been pushing aggressively in this field, working to implant tiny, flexible threads into the brain to read and transmit neural signals. While Stanford’s research is academically driven, Neuralink is focused on commercializing such technology for everyday use.

The ultimate goal, according to Musk, is a seamless “symbiosis” between humans and artificial intelligence — a future where thought-to-text, thought-to-command, and even thought-to-thought communication become possible.

Privacy and Ethical Concerns

While the benefits are exciting, there are serious challenges and concerns that cannot be ignored:

- Privacy Issues – If a computer can read your thoughts, who controls that data? Unauthorized access to someone’s neural data could have grave consequences.
- Misinterpretation of Thoughts – Brain activity is complex, and decoding it accurately is difficult. A system misinterpreting signals could lead to unintended actions.
- Health Risks – Invasive implants carry risks such as infection, scar tissue formation, or long-term brain irritation.
- Consent and Security – There is a need for robust safeguards to ensure that a person’s thoughts are only read when they consent.

Stanford researchers have already taken a step toward addressing these issues by developing a mental “password” system, in which decoding happens only if the user mentally authorizes it. It is an early but meaningful safeguard for safety and privacy.
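The idea of a mental password can be pictured as a gate in front of the decoder's output. The sketch below is only an illustration of that idea, not the researchers' implementation: the `GatedDecoder` class, its method names, and the example phrase are hypothetical.

```python
class GatedDecoder:
    """Passes decoded phrases through only after the user 'thinks' the unlock phrase."""

    def __init__(self, unlock_phrase):
        self.unlock_phrase = unlock_phrase
        self.unlocked = False

    def handle(self, decoded_phrase):
        """Return the phrase if unlocked; otherwise return None and emit nothing."""
        if not self.unlocked:
            # While locked, the only phrase that has any effect is the password.
            if decoded_phrase == self.unlock_phrase:
                self.unlocked = True
            return None
        return decoded_phrase

    def lock(self):
        """Re-lock the decoder, for example at the end of a session."""
        self.unlocked = False


decoder = GatedDecoder("open sesame")
print(decoder.handle("private thought"))  # locked, nothing is emitted
print(decoder.handle("open sesame"))      # unlocks, password itself is not emitted
print(decoder.handle("hello"))            # now passed through
```

The design choice here is that everything decoded while the gate is locked is discarded rather than stored, so stray thoughts never reach the screen or a log.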

The Challenges of Thought Decoding

Thought decoding is not as simple as flipping a switch. Each brain is unique, and neural patterns differ from person to person. That means BCI systems must be highly personalized.

- Training Period – Users may need to spend hours or days training the algorithm to recognize their unique brain patterns.
- Noise Filtering – The brain is constantly active. Differentiating between random thoughts and intentional commands is complex.
- Scaling to Mass Adoption – Making the technology affordable, reliable, and easy to use outside of a lab setting remains a major hurdle.
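The first two challenges, per-user training and telling intentional commands from background activity, can be illustrated with a small sketch. Everything in it is an assumption made for the example: the two-electrode readings, the command names, and the `max_distance` cutoff are invented, and real systems use much richer models.

```python
from statistics import mean

def calibrate(training):
    """Training period: average one user's examples into a template per command."""
    return {
        command: [mean(channel) for channel in zip(*examples)]
        for command, examples in training.items()
    }

def decode_or_ignore(features, templates, max_distance=0.1):
    """Noise filtering: return the nearest command, or None if nothing is close."""
    best, best_dist = None, float("inf")
    for command, template in templates.items():
        dist = sum((f - t) ** 2 for f, t in zip(features, template))
        if dist < best_dist:
            best, best_dist = command, dist
    return best if best_dist <= max_distance else None

# Hypothetical calibration data recorded from a single user.
user_training = {
    "lights_on": [[0.9, 0.1], [0.8, 0.2]],
    "lights_off": [[0.1, 0.9], [0.2, 0.8]],
}
templates = calibrate(user_training)

print(decode_or_ignore([0.85, 0.15], templates))  # close to a trained pattern
print(decode_or_ignore([0.5, 0.5], templates))    # ambiguous activity is ignored
```

Because the templates come from one person's recordings, a second user would need their own calibration run, which is the personalization cost described above.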

Despite these challenges, researchers remain optimistic. Machine learning models are improving rapidly, and hardware is becoming smaller and safer.

Future Possibilities

Looking ahead, the potential of BCI is staggering:

- Direct Thought-to-Text Messaging – Typing could become obsolete as people compose messages using pure thought.
- Hands-Free Computing – Workers in dangerous or sterile environments could operate equipment without physical contact.
- Cognitive Enhancement – Some experts envision BCIs being used not just for communication, but for improving memory, focus, and learning capacity.
- Telepathic-Like Communication – In the long run, two people with BCIs might be able to share information brain-to-brain, bypassing speech entirely.

Such advances raise philosophical questions too: what does it mean for human identity when thoughts can be shared, stored, or analyzed like data?

Conclusion

Stanford University’s breakthrough is a powerful demonstration of what’s possible when neuroscience meets artificial intelligence. The study shows that a computer can decode some human thoughts without any speech or physical movement, bringing us closer to a world where mind and machine work together smoothly.

For millions of people with disabilities, this could be a game-changer, restoring their ability to communicate and control their environment. For society as a whole, it represents both an opportunity and a challenge — an opportunity to create more inclusive, accessible technology, and a challenge to protect the sanctity and privacy of human thought.

As BCI technology advances, one thing is clear: the way we interact with machines — and perhaps with each other — is about to undergo a transformation unlike anything we’ve seen before.